Pi2 Cluster - Docker Swarm

I am currently overhauling my network and devices once again, so finally (maybe) I’ll actually get around to producing a commodity cluster. This post focuses on getting Docker up and running on the Raspberry Pi 2.

Hardware

- 5 x RasPi2

- 1 x Utilite Pro

Focusing on the Pi2s here, as I’ve not rebuilt the Utilite at this moment in time.

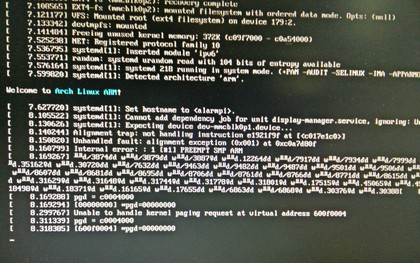

Installing Arch Linux

Why are we using Arch and not Raspbian? Simply because of time constraints: Arch has ARM packages for docker (and openvswitch), and this will save some time going forward.

As I’ll be imaging multiple SD cards, I wrote a bash script to save some time.

This assumes you have already done the partitioning per the Arch installation document.

WARNING: Do not blindly use my script. The device paths may be different on your machine, and you do not want to be wiping out the wrong device.
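For illustration, here is a minimal sketch of what such an imaging helper can look like (not the exact script referred to above). It assumes the card already has two partitions (boot first, root second), that the Arch Linux ARM tarball for the Pi 2 is already downloaded to the current directory, and that `bsdtar` is installed. The names `image_card`, `run`, `DRY_RUN` and the device `/dev/sdX` are made up for this example. By default it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch of an SD card imaging helper (hypothetical names throughout).
# Assumes partition 1 = boot (FAT) and partition 2 = root (ext4) already exist.
set -e

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print commands, 0 = really run them
TARBALL="${TARBALL:-ArchLinuxARM-rpi-2-latest.tar.gz}"   # assumed file name

# Print the command; execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# image_card DEVICE, e.g. image_card /dev/sdX
# Note: devices such as /dev/mmcblk0 name their partitions p1/p2 instead.
image_card() {
    local dev="$1"
    run mkfs.vfat "${dev}1"
    run mkfs.ext4 "${dev}2"
    run mkdir -p boot root
    run mount "${dev}1" boot
    run mount "${dev}2" root
    run bsdtar -xpf "$TARBALL" -C root    # unpack the root filesystem
    run sync
    run sh -c 'mv root/boot/* boot'       # boot files go on the FAT partition
    run umount boot root
}

image_card "${1:-/dev/sdX}"
```

Read the printed commands and double-check the device path before rerunning as root with `DRY_RUN=0`.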

Installing Docker

pacman -S docker

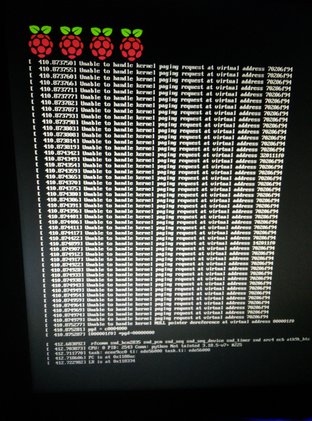

Caveats of docker on ARM

Most docker images are built for x86 or x86_64, so when you docker pull one and try to docker run it on ARM you’re going to have a bad time …

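One way to catch the mismatch up front is to compare the image's architecture with the host's before running it. A minimal sketch, assuming your Docker version exposes the `Architecture` field through `docker inspect`; the helper names `arch_family` and `image_runs_here` and the image name `some/image` are made up for this example, and the family mapping is deliberately coarse:

```shell
#!/usr/bin/env bash
# Map a machine or image architecture string onto a coarse family name.
arch_family() {
    case "$1" in
        x86_64|amd64)       echo amd64 ;;
        i386|i686|386)      echo 386 ;;
        armv6l|armv7l|arm)  echo arm ;;
        aarch64|arm64)      echo arm64 ;;
        *)                  echo "$1" ;;
    esac
}

# image_runs_here IMAGE_ARCH [HOST_ARCH]
# Succeeds when the image family matches the host (default: uname -m).
image_runs_here() {
    local image_arch="$1" host_arch="${2:-$(uname -m)}"
    [ "$(arch_family "$image_arch")" = "$(arch_family "$host_arch")" ]
}

# Typical use on a node (needs a running Docker daemon, so left commented out):
# img_arch="$(docker inspect --format '{{.Architecture}}' some/image)"
# image_runs_here "$img_arch" || echo "some/image is $img_arch, will not run here"
```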

Running swarm without running swarm

The swarm docker images will not run on ARM, so what do we do?

Simple: we build the swarm binary from source.

pacman -S golang godep

Check the GitHub README via the link above to get swarm to compile.
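As a rough guide, the from-source steps for swarm at the time looked something like the sketch below. Treat it as an assumption rather than the README's exact text: it assumes a `GOPATH` workspace in `~/go` (which would explain the `go/bin/swarm` path used further down), `git` installed, and `godep` on the `PATH`. The `run` helper and `DRY_RUN` switch are made up for this example, and by default it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: build the swarm binary in a GOPATH workspace (assumed layout).
set -e

DRY_RUN="${DRY_RUN:-1}"                 # 1 = only print, 0 = really build
export GOPATH="${GOPATH:-$HOME/go}"     # assumption: workspace lives in ~/go
SRC="$GOPATH/src/github.com/docker"

# Print the command; execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

build_swarm() {
    run mkdir -p "$SRC"
    run git clone https://github.com/docker/swarm "$SRC/swarm"
    run cd "$SRC/swarm"
    run godep go install .              # binary should land in $GOPATH/bin/swarm
}

build_swarm
```

If the README disagrees with any of these steps, follow the README.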

Start the swarm

On one node, run go/bin/swarm create and record the token.

Now, on every node, join the swarm using that token:

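The join step can be sketched as follows. It assumes the Docker daemon on each node is listening on TCP port 2375, and that the binary lives in `~/go/bin`. The IP address, the `<cluster_token>` placeholder, and the names `join_swarm`, `run`, `NODE_IP`, `TOKEN` and `DRY_RUN` are illustrative only. By default it just prints the command.

```shell
#!/usr/bin/env bash
# Sketch: register this node with the swarm (hypothetical variable names).
DRY_RUN="${DRY_RUN:-1}"                          # 1 = only print, 0 = really run
SWARM_BIN="${SWARM_BIN:-$HOME/go/bin/swarm}"     # assumed binary location

# Print the command; execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# join_swarm NODE_IP TOKEN
# --addr is the address other machines use to reach this node's Docker daemon.
join_swarm() {
    local node_ip="$1" token="$2"
    run "$SWARM_BIN" join --addr="${node_ip}:2375" "token://${token}"
}

# Placeholders: this node's own IP and the token printed by `swarm create`.
join_swarm "${NODE_IP:-192.168.0.11}" "${TOKEN:-<cluster_token>}"
```

The join command stays in the foreground, so run it in a separate terminal or multiplexer session on each node.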

Now we need to start the manager. This can be on any node, or even on a separate machine such as your laptop or desktop.

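Starting the manager can be sketched like this. The listen port 4000 is an arbitrary choice for the example (it only needs to differ from the Docker daemon's own port when the manager shares a node with it); `start_manager`, `run`, `LISTEN`, `TOKEN` and `DRY_RUN` are made-up names, and the token is a placeholder. By default it only prints the command.

```shell
#!/usr/bin/env bash
# Sketch: start the swarm manager (hypothetical variable names).
DRY_RUN="${DRY_RUN:-1}"                          # 1 = only print, 0 = really run
SWARM_BIN="${SWARM_BIN:-$HOME/go/bin/swarm}"     # assumed binary location

# Print the command; execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# start_manager LISTEN_ADDR TOKEN
# LISTEN_ADDR is the ip:port that docker clients will later connect to.
start_manager() {
    local listen="$1" token="$2"
    run "$SWARM_BIN" manage -H "tcp://${listen}" "token://${token}"
}

start_manager "${LISTEN:-0.0.0.0:4000}" "${TOKEN:-<cluster_token>}"
```

Docker clients then talk to the manager's address instead of an individual node.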

Check the swarm

Again, this can be run from any docker client.

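The check itself is `docker info` pointed at the manager with `-H`. As a small sketch, the helper below pulls the node count out of that output. It assumes this swarm version prints a `Nodes: N` line; the function name `node_count` and the manager address are made up for this example.

```shell
#!/usr/bin/env bash
# node_count: read `docker info` output on stdin and print the value of the
# "Nodes:" line. The line format is an assumption about this swarm version.
node_count() {
    awk -F': *' '/^Nodes:/ { print $2; exit }'
}

# Against a live manager (placeholder address, so left commented out):
# docker -H tcp://192.168.0.10:4000 info | node_count
```

With all five Pi2s joined, the count should come back as 5.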

So there we have it: 20 available ARM cores, all running in a docker swarm. Seems simple, doesn’t it? Finding the correct information to make this all work, however, was a trial in itself.

TODO

- Rebuild the utilite-pro and make it part of the docker swarm (bringing the core count to 24)

- Force docker to use TLS

- Try to get ceph compiling (throwing issues about not finding any high precision timers)

- Unanswered user list question on this issue

- GitHub pull request fixing this issue for PowerPC only

- This blog post gives some hope on finding a fix

- Write up notes on getting Logstash 1.5.0 and docker on ARM to play nice together

- Complete setup of openvswitch

- Explore deployment of cuckoo sandbox

- Explore Hadoop components

- Write up notes on distccd setup (this really speeds up compilation time)

- Write up systemd entries for swarm (allow automatic swarm cluster startup on reboot).

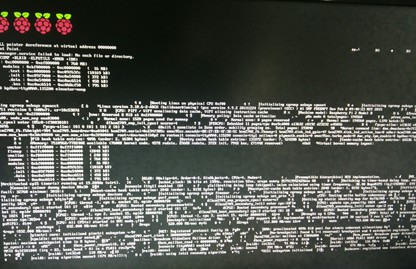

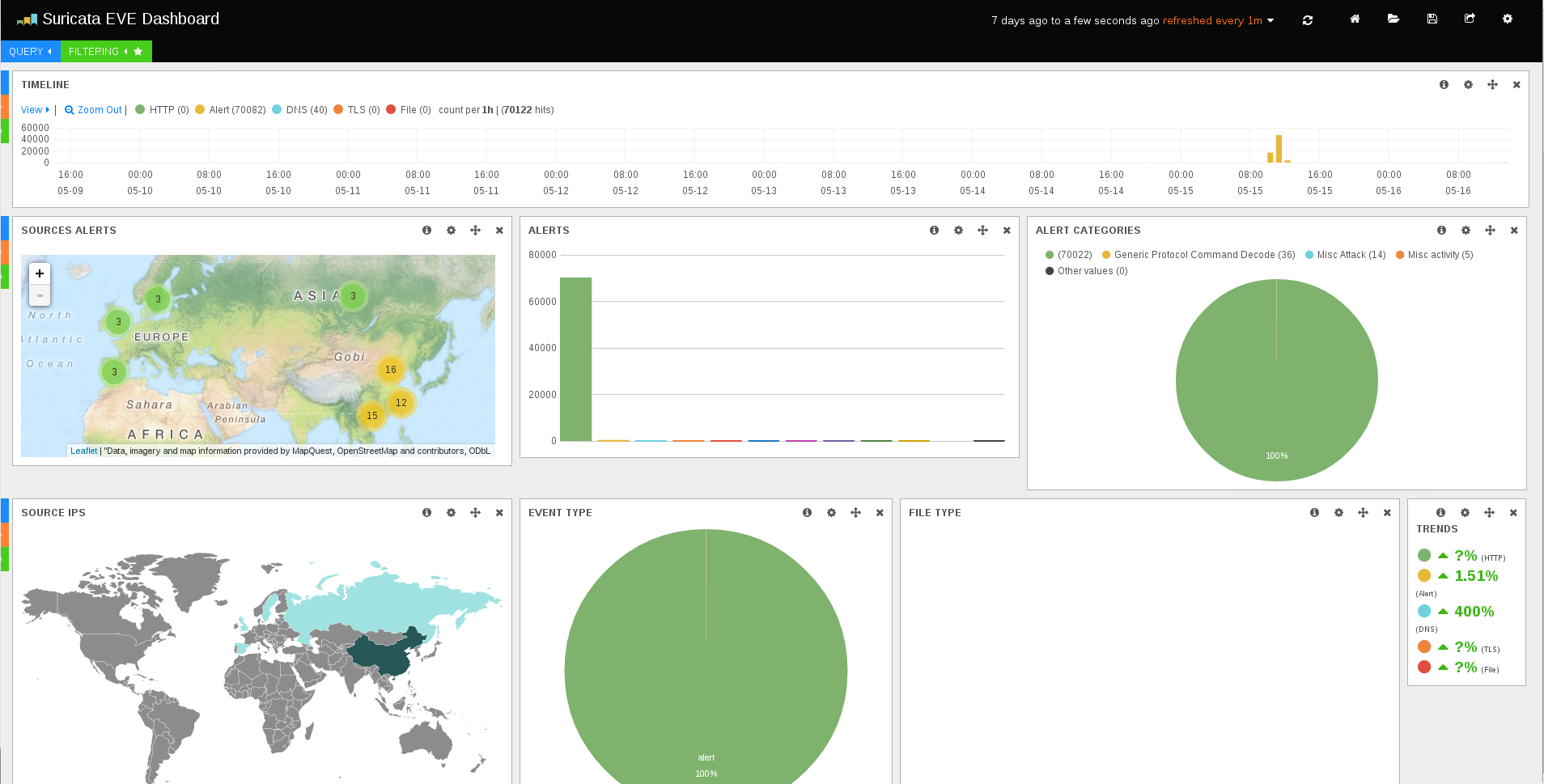

Photos

I’m uploading photos and screenshots of the cluster as progress is made here

Why Pi2?

We can’t all get our hands on an HP Moonshot. I debated for some time what to use; the Pi2 won out due to:

- Price

- Form factor

- Number of cores

- Readily available distros and packages

- Readily available accessories (cases, etc.)

- Low power consumption (5 Pi2s, 1 utilite-pro, MikroTik switch, USB thumb drives, and USB HDs, all running at just under 33 watts)

- ARM architecture